The AI industry faces an unexpected challenge even as it continues to grow rapidly. Tech mogul Elon Musk has recently warned that we’ve reached what he calls “peak data” – a point where the human-generated content available for training AI models has essentially been exhausted. This claim raises fundamental questions about the future trajectory of artificial intelligence development and the alternatives open to it.

The data scarcity threatening AI advancement

Technology giants like Google, OpenAI, and Meta have invested billions in generative AI systems that require massive datasets for training. However, the supply of valuable human-created data is running out, according to Musk’s assessment. He says this critical “peak data” threshold was reached in 2024, forcing companies to seek alternative methods for improving their models.

This warning wasn’t unexpected in industry circles. OpenAI co-founder Ilya Sutskever made the same argument at the NeurIPS conference in December 2024, telling the audience that the industry had reached “peak data.” The research institute Epoch had published findings as early as 2022 with a sobering timeline:

- Text-based training data would likely be depleted between 2023 and 2027

- Visual content might remain viable until approximately 2060

- Audio and specialized data face similar constraints

The quality of AI models directly correlates with the diversity and freshness of their training data. Without new human-generated content, AI systems risk stagnation or performance deterioration over time, potentially undermining recent technological breakthroughs and limiting future innovation.

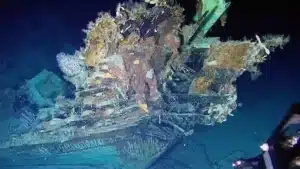


Synthetic data: the controversial solution

As natural data sources dwindle, the tech industry has pivoted toward synthetic data – artificial content generated by existing AI models to train newer systems. Musk endorses this approach, joining companies like Microsoft, OpenAI, Anthropic, and Meta that already incorporate synthetic data into their AI training regimens.

Gartner estimates that about 60% of the data used for AI and analytics projects in 2024 was synthetically generated rather than human-created. The shift brings both advantages and risks:

| Benefits of Synthetic Data | Potential Risks |
|---|---|
| Reduced privacy concerns | Loss of connection to human reality |
| Lower collection/processing costs | Amplification of existing biases |
| Unlimited generation capacity | Decreased model diversity |
| Customizable for specific scenarios | Risk of “model collapse” |

However, this shift introduces significant concerns about AI reliability and diversity. The most alarming is what experts term “model collapse” – a degradation cycle where AI systems trained primarily on synthetic data begin to amplify their own limitations and biases.
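The feedback loop behind model collapse can be illustrated with a toy simulation. The sketch below is only an analogy, not how any production model is trained: it assumes a "model" is nothing more than a Gaussian fitted to its training samples, and that each generation is trained solely on samples drawn from the previous generation. The function name `simulate_collapse` and all parameter values are hypothetical choices for illustration.

```python
import random
import statistics


def simulate_collapse(generations=100, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples and track the fitted spread.

    Toy analogy only: the "model" is a mean and a standard deviation,
    and every generation trains purely on the previous one's output.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for human-made data
    history = [sigma]
    for _ in range(generations):
        # The new "model" only ever sees synthetic samples.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood estimate
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in (0, 10, 50, 100):
        print(f"generation {gen:3d}: fitted std = {history[gen]:.6f}")
```

Because each refit is made from a small sample, estimation errors compound from one generation to the next and the fitted spread tends to shrink, so later generations cover less and less of the original distribution. Real language models are far more complex, but the loss of diversity follows the same basic pattern.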


Finding balance in the post-peak data era

Despite these challenges, technology companies continue integrating synthetic data into their development pipelines. Recent models including Microsoft’s Phi-4, Google’s Gemma, and Anthropic’s Claude 3.5 Sonnet were already trained in part on artificially generated content.

The critical challenge facing the industry is striking an appropriate balance between synthetic and real-world data sources. Regulatory frameworks and technical safeguards will be essential to prevent over-reliance on artificial data while maintaining innovation momentum.

Several strategies are being explored to address these concerns:

- Developing validation protocols for synthetic data quality

- Creating hybrid training approaches that preserve real data connections

- Exploring entirely new architectures that require less training data

- Establishing industry standards for data diversity and quality
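One way to picture the hybrid approach in the list above is a batch sampler that caps the share of synthetic examples, so every training batch keeps a guaranteed minimum of real data. This is a minimal sketch under stated assumptions: the function `mix_batch`, its parameters, and the default 50% cap are hypothetical and not taken from any company's pipeline.

```python
import random


def mix_batch(real, synthetic, batch_size, max_synthetic_fraction=0.5, seed=None):
    """Draw a batch in which synthetic examples never exceed a fixed share.

    Hypothetical helper for illustration; `real` and `synthetic` are lists.
    """
    if not 0.0 <= max_synthetic_fraction <= 1.0:
        raise ValueError("max_synthetic_fraction must be between 0 and 1")
    rng = random.Random(seed)
    # Cap the synthetic count; the remainder of the batch must be real data.
    n_synth = min(int(batch_size * max_synthetic_fraction), len(synthetic))
    n_real = batch_size - n_synth
    if n_real > len(real):
        raise ValueError("not enough real examples to fill the batch")
    batch = rng.sample(real, n_real) + rng.sample(synthetic, n_synth)
    rng.shuffle(batch)  # avoid a fixed real-then-synthetic ordering
    return batch


if __name__ == "__main__":
    real = [("real", i) for i in range(100)]
    synthetic = [("synthetic", i) for i in range(100)]
    batch = mix_batch(real, synthetic, batch_size=20, max_synthetic_fraction=0.25, seed=1)
    print(sum(1 for source, _ in batch if source == "synthetic"), "synthetic of", len(batch))
```

The sampler fails loudly when there is too little real data rather than silently topping up with synthetic examples, which reflects the goal of preserving a connection to real data. The right cap would have to be found empirically for any given model and task.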

The decisions made today regarding “peak data” and AI training methodologies will shape technological development for decades. The fundamental question remains: should we prioritize rapid advancement through synthetic data, or establish stricter guardrails to preserve AI diversity and reliability? As Musk’s warning reverberates through the industry, finding this balance becomes increasingly urgent for sustainable AI progress.